🌸 Good Morning - The Jevons Paradox of LLMs

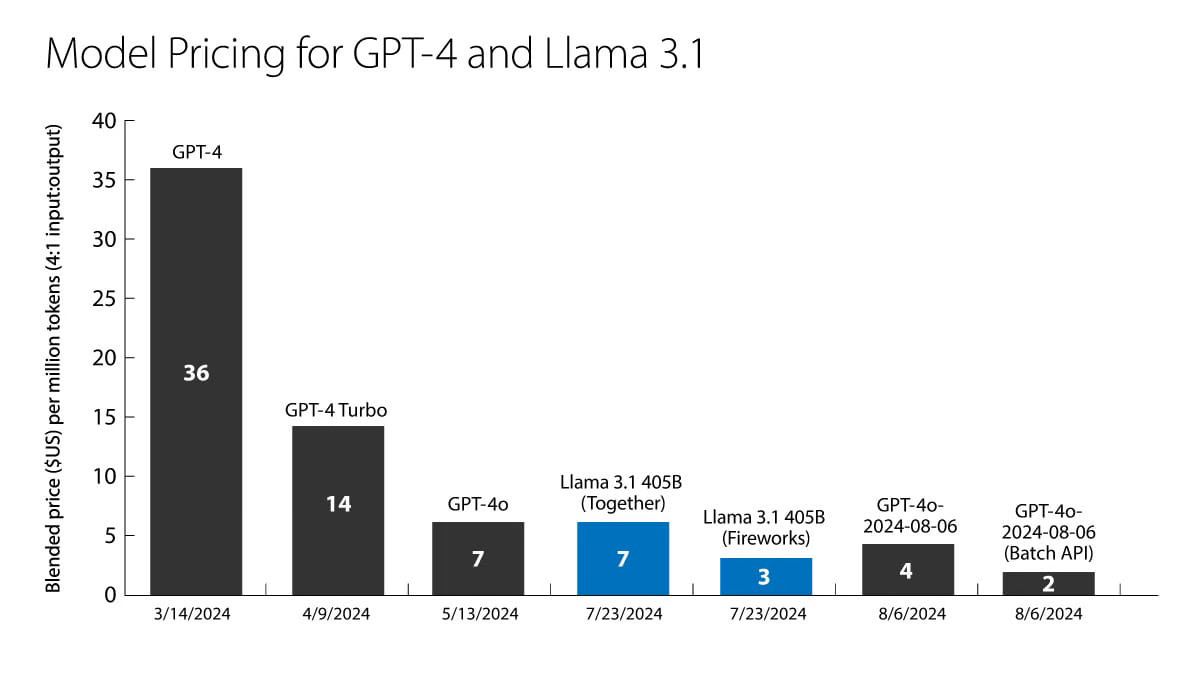

Over the past few years, we've witnessed a remarkable 79% reduction in costs: the cost per million tokens for large language models has been falling even faster than the growth in computing power predicted by Moore’s Law.

Let me take you back to the 19th century, when the British economist William Stanley Jevons (1835-1882) described what would later be known as the Jevons Paradox. In The Coal Question (1865), he observed that after James Watt introduced his efficient steam engine, which used significantly less coal than earlier designs, coal consumption did not decrease as many had expected. The opposite occurred: coal consumption in the UK soared.

Similarly, consider Apple’s iPod and iTunes, which many thought would simply make music more accessible. Instead, they revolutionized the music industry, leading to an unprecedented surge in music consumption.

The same dynamic explains the widespread adoption of large language models: as tokens get cheaper, total usage grows rather than shrinks, and everyone's buzzing about AI agents.
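To make the paradox concrete, here is a small back-of-the-envelope sketch. It takes the 79% cost reduction quoted above and asks how much usage has to grow before total spend exceeds what it was before the price drop. The function names and the usage growth factors (2x, 5x, 10x) are hypothetical, chosen only for illustration; they are not measurements.

```python
def break_even_usage_multiplier(cost_reduction: float) -> float:
    """Usage growth factor at which total spend equals the spend before the price drop."""
    if not 0.0 <= cost_reduction < 1.0:
        raise ValueError("cost_reduction must be in [0, 1)")
    return 1.0 / (1.0 - cost_reduction)


def relative_spend(cost_reduction: float, usage_multiplier: float) -> float:
    """Total spend after the price drop, relative to spend before it (1.0 = unchanged)."""
    return (1.0 - cost_reduction) * usage_multiplier


if __name__ == "__main__":
    reduction = 0.79  # the 79% figure from this post
    print(f"break-even usage growth: {break_even_usage_multiplier(reduction):.2f}x")
    for growth in (2, 5, 10):  # hypothetical usage growth factors
        ratio = relative_spend(reduction, growth)
        print(f"usage x{growth}: total spend is {ratio:.2f}x the original")
```

Under these assumptions, once usage grows by more than roughly 4.8x, total spend on tokens is higher than before the price fell, which is the Jevons pattern in miniature.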

However, in real-world production, it's not just about deploying a single agent; we need a whole flock of them, 100 or more, working together seamlessly. From my experience over the past year, I've noticed that while agent integration succeeds 90-95% of the time, the end-to-end pipeline often breaks down because full automation is still lacking. This is the real challenge. The company that can crack this 'last mile' will be the next unicorn.
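One way to see why a 90-95% success rate is not enough at this scale is to look at how reliability compounds. The sketch below is a simplification, not a description of any real system: it assumes the 90-95% figure applies to each agent separately, that agents run as a chain in which every one must succeed, and that failures are independent. The function name is hypothetical.

```python
def pipeline_success_rate(per_agent_success: float, num_agents: int) -> float:
    """End-to-end success probability when every agent in a chain must succeed.

    Assumes independent failures, which is a simplifying assumption.
    """
    if not 0.0 <= per_agent_success <= 1.0:
        raise ValueError("per_agent_success must be in [0, 1]")
    if num_agents < 0:
        raise ValueError("num_agents must be non-negative")
    return per_agent_success ** num_agents


if __name__ == "__main__":
    for p in (0.90, 0.95):          # the 90-95% range mentioned above
        for n in (1, 10, 100):      # hypothetical chain lengths
            rate = pipeline_success_rate(p, n)
            print(f"p={p:.2f}, agents={n:>3}: end-to-end success = {rate:.4%}")
```

Under these assumptions, a chain of 100 agents at 95% each completes without a failure less than 1% of the time, which is one reading of why the 'last mile' of automation matters so much.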