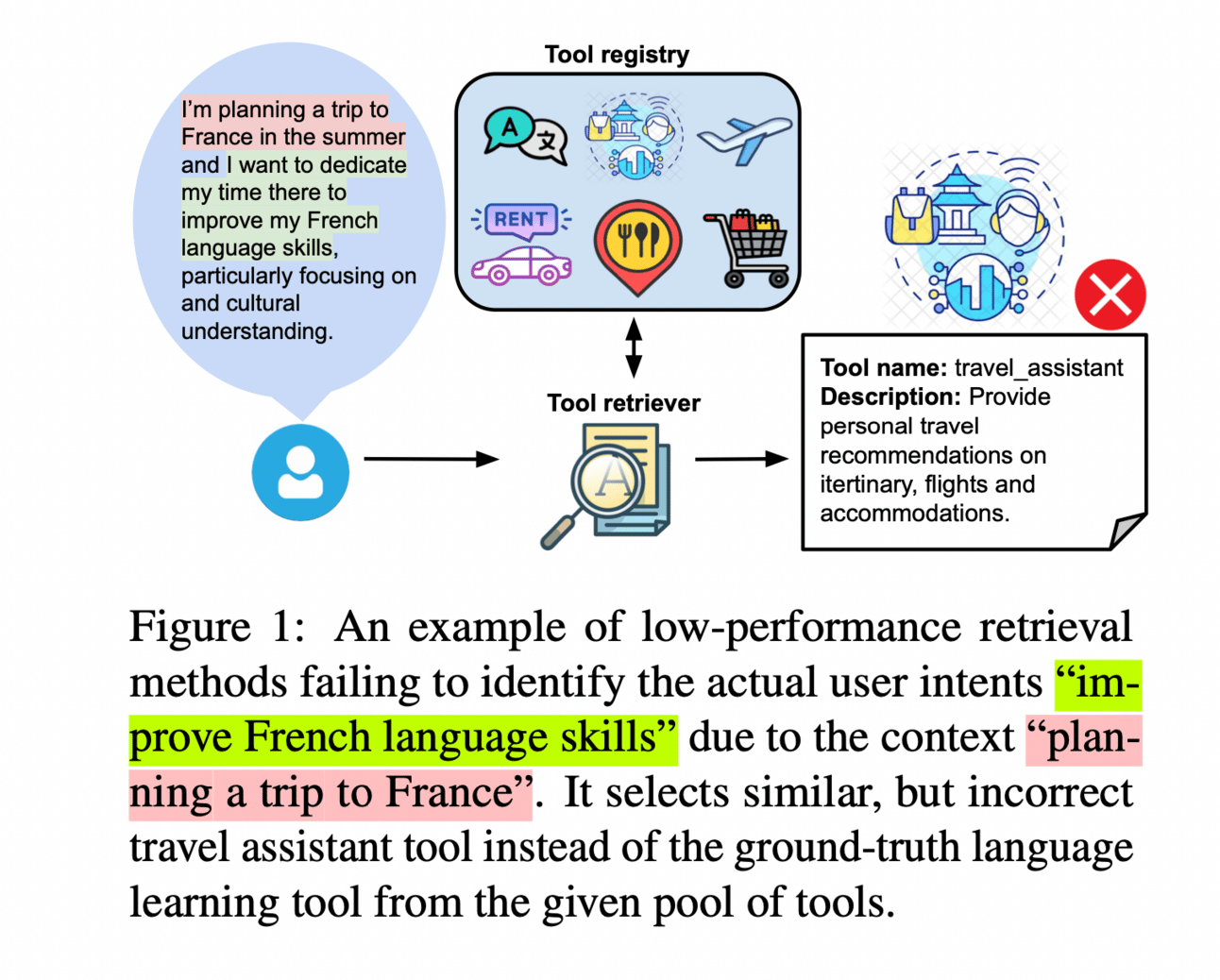

Selecting the appropriate agent from a flock of agents is a challenge: we need to understand the intent of the prompt and then call the matching agent from the agent library.

After reading a couple of research papers, I came across several relevant frameworks. Let’s explore them:

🌸 Re-Invoke: Tool Invocation Rewriting for Zero-Shot Tool Retrieval

An unsupervised tool retrieval method from Google.

Phase 1: Generate diverse synthetic queries during the tool indexing phase to cover the query space of each tool document.

Phase 2: Use LLMs to extract the key contexts and intents from user queries during the inference phase.

Phase 3: Implement a multi-view similarity ranking strategy based on intents to identify the most relevant tools.

🌼 Query Generator

The framework prompts an LLM to read each tool document and write synthetic user queries that the tool could serve.

Multiple synthetic queries are generated per tool by sampling with different temperatures and models.
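The indexing phase can be sketched as follows. This is a minimal illustration under stated assumptions, not the paper’s code: `fake_llm_queries` is a hypothetical stand-in for sampling queries from an LLM, `embed` is a toy bag-of-words embedding in place of a real embedding model, tool documents are assumed to look like `name: description`, and representing each tool by the average of its expanded-document embeddings is a simplifying assumption.

```python
import math
from collections import Counter


def embed(text: str) -> dict[str, float]:
    """Toy bag-of-words embedding, L2-normalized (stand-in for a real embedding model)."""
    counts = Counter(text.lower().split())
    norm = math.sqrt(sum(c * c for c in counts.values()))
    return {w: c / norm for w, c in counts.items()}


def average(vectors: list[dict[str, float]]) -> dict[str, float]:
    """Element-wise mean of sparse vectors."""
    total: Counter = Counter()
    for v in vectors:
        total.update(v)
    return {w: s / len(vectors) for w, s in total.items()}


def fake_llm_queries(tool_doc: str, n: int) -> list[str]:
    """Hypothetical stand-in for sampling n queries from LLMs at different temperatures.

    Assumes the tool document has the form "name: description".
    """
    templates = ["how do i {}", "please {}", "can you help me {}"]
    action = tool_doc.split(":", 1)[1].strip()
    return [templates[i % len(templates)].format(action) for i in range(n)]


def index_tool(tool_doc: str, n_queries: int = 3) -> tuple[list[str], dict[str, float]]:
    queries = fake_llm_queries(tool_doc, n_queries)
    # Each expanded document = one synthetic query + the original tool document.
    expanded = [f"{q} {tool_doc}" for q in queries]
    # Assumption: the tool vector is the mean of its expanded-document embeddings.
    tool_vector = average([embed(d) for d in expanded])
    return expanded, tool_vector
```

For example, `index_tool("get_weather: get the weather forecast for a city")` returns three expanded documents, each pairing one phrasing of the request with the tool description, so the tool is indexed under several ways a user might ask for it.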

🌼 Intent Extractor

We have repeatedly encountered a challenge in the chatbot domain where users provide verbose and extraneous information before stating their actual question, complicating the intent identification process. Additionally, users may express multiple intents within a single query, further complicating retrieval efforts.

To address this, the framework leverages LLMs’ reasoning and query-understanding capabilities through in-context learning to accurately extract tool-related intents from complex user queries.

Once the intents are extracted, they replace the original user queries in the embedding space during tool retrieval. This approach enables the retrieval system to recommend all relevant tools for each identified intent, significantly enhancing retrieval accuracy.
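In practice, the in-context-learning step comes down to a few-shot prompt plus parsing of the model’s answer. A minimal sketch, assuming a hypothetical prompt wording, a hypothetical few-shot example, and an LLM that answers with one intent per line; the actual Re-Invoke prompt is not reproduced here.

```python
import re

# Hypothetical few-shot example (assumption): verbose query -> list of tool-related intents.
FEW_SHOT_EXAMPLES = [
    (
        "I'm flying to Paris next week for a conference and I'm not sure what to pack. "
        "What's the weather going to be like, and can you convert 200 USD to EUR?",
        ["get the weather forecast for Paris next week", "convert 200 USD to EUR"],
    ),
]

# Matches a leading bullet such as "-", "*", "1." or "2)" so it can be stripped.
BULLET = re.compile(r"^\s*(?:[-*]|\d+[.)])\s*")


def build_intent_prompt(user_query: str) -> str:
    """Assemble a few-shot prompt asking the LLM to list only tool-related intents."""
    parts = [
        "Extract the tool-related intents from the user query. "
        "Ignore background details. Output one intent per line.\n"
    ]
    for query, intents in FEW_SHOT_EXAMPLES:
        parts.append(f"Query: {query}\nIntents:\n" + "\n".join(intents) + "\n")
    parts.append(f"Query: {user_query}\nIntents:\n")
    return "\n".join(parts)


def parse_intents(llm_output: str) -> list[str]:
    """Turn a line-per-intent answer into a clean list, dropping bullets and blank lines."""
    intents = []
    for line in llm_output.splitlines():
        cleaned = BULLET.sub("", line).strip()
        if cleaned:
            intents.append(cleaned)
    return intents
```

Each parsed intent is then embedded and used as a separate retrieval query in place of the raw user text.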

🌼 Multi-view Similarity Ranking

Because each intent extracted from a user query may retrieve different relevant tools, the framework introduces a multi-view similarity ranking method that considers all tool-related intents expressed in the query.

This method aggregates similarity scores between each intent and the corresponding expanded tool document. By incorporating multiple perspectives within the embedding space, it provides a robust measure of relevance.
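The ranking idea can be sketched with plain cosine similarity: each intent is one “view”, every tool is scored against every view, and the per-view scores are aggregated. The paper’s exact aggregation rule is not reproduced here; this sketch defaults to taking the maximum over intents (an assumption), so a tool that strongly matches any single intent ranks high. The vectors in the usage note are made-up toy values.

```python
import math
from typing import Callable


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def multi_view_rank(
    intent_vecs: list[list[float]],
    tool_vecs: dict[str, list[float]],
    aggregate: Callable[[list[float]], float] = max,  # assumption: max over intents
) -> list[tuple[str, float]]:
    """Score each tool against every intent ("view"), aggregate, and sort descending."""
    scores = {}
    for tool, tvec in tool_vecs.items():
        per_intent = [cosine(ivec, tvec) for ivec in intent_vecs]
        scores[tool] = aggregate(per_intent)
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)
```

With two intents, one close to a `weather` tool vector and one close to a `currency` tool vector, both tools land at the top of the ranking, which is the point of scoring per intent rather than against one blended query. Passing `aggregate=sum` instead favors tools that match several intents at once.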

🌸 Agent Q: Advanced Reasoning and Learning for Autonomous AI Agents

A group of researchers from Stanford University propose a framework that combines guided Monte Carlo Tree Search (MCTS) with a self-critique mechanism and iterative fine-tuning on agent interactions.

In the simulated WebShop e-commerce environment, the framework consistently outperforms behavior cloning and reinforced fine-tuning baselines, and even surpasses average human performance when equipped with online search capabilities. In real-world booking scenarios, the methodology boosts the Llama-3 70B model’s zero-shot success rate from 18.6% to 81.7% after just a single day of data collection, and further to 95.4% with online search capabilities.

🌼 Agent Formulation

In this approach, they model the agent as a general Partially Observable Markov Decision Process (POMDP) characterized by the following components:

Observation space (𝒪)

Unobserved state space (𝒮)

Action space (𝒜)

Transition distribution (𝑇)

Reward function (𝑅)

Initial state distribution (𝜇0)

Discount factor (𝛾)
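For reference, these components fit together in the standard textbook way. The block below uses general POMDP notation rather than equations quoted from the paper: the hidden state evolves under T, the agent acts only on its history of observations and actions, and the objective is the expected discounted reward.

```latex
\mathcal{M} = (\mathcal{O}, \mathcal{S}, \mathcal{A}, T, R, \mu_0, \gamma), \qquad
s_0 \sim \mu_0, \quad s_{t+1} \sim T(\cdot \mid s_t, a_t)

h_t = (o_0, a_0, o_1, a_1, \dots, a_{t-1}, o_t), \qquad a_t \sim \pi(\cdot \mid h_t)

J(\pi) = \mathbb{E}_{\pi}\left[\sum_{t=0}^{\infty} \gamma^{t} R(s_t, a_t)\right]
```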

The agent uses a ReAct-based strategy augmented with an initial planning step (PlanReAct). Each of its actions is composite, consisting of sequential phases:

Planning: The agent starts by generating a planned sequence of actions based on the initial observation.

Reasoning: Each action involves a reasoning step to evaluate and adapt the plan.

Environment Interaction: Actions specific to browser interactions, such as clicking or typing, are generated based on the reasoned plan.

Explanation: Post-interaction, the agent generates an explanatory action detailing the rationale behind its decisions.

These actions are modeled as a tuple (plan, thought, environment, explanation) for the initial step and (thought, environment, explanation) for subsequent steps.
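The composite action format maps naturally onto a small data structure. A sketch, assuming string-valued fields and illustrative browser commands such as `CLICK [7]`; the class and command names are hypothetical, not the paper’s schema.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CompositeAction:
    """One agent step in the PlanReAct-style format (hypothetical schema)."""

    thought: str                # reasoning that evaluates and adapts the plan
    environment: str            # browser command, e.g. "CLICK [7]" (illustrative syntax)
    explanation: str            # rationale emitted after the interaction
    plan: Optional[str] = None  # only present on the first step

    def as_tuple(self) -> tuple:
        if self.plan is not None:
            return (self.plan, self.thought, self.environment, self.explanation)
        return (self.thought, self.environment, self.explanation)


def validate_trajectory(actions: list[CompositeAction]) -> bool:
    """Check the convention: a plan on the first step and none afterwards."""
    if not actions or actions[0].plan is None:
        return False
    return all(a.plan is None for a in actions[1:])
```

The first step then serializes to a four-element tuple and every later step to a three-element one, matching the two shapes described above.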

🌼 Monte Carlo Tree Search over Web Pages

The Monte Carlo Tree Search (MCTS) algorithm used in this study is structured around four phases: selection, expansion, simulation, and backpropagation.

These phases collectively aim to balance the dual objectives of exploration and exploitation, iteratively refining the agent’s policy for more effective decision-making.

For the web agent, they implement tree search across web pages, where each state in the search tree is defined by a summary of the agent’s historical interactions and the Document Object Model (DOM) tree of the current web page. This setup differs significantly from more constrained environments such as Chess or Go, because the web agent’s action space is open-ended and highly variable.

Because there is no fixed action set, they use the base model as an action-proposal distribution, sampling a fixed number of candidate actions at each node (web page).
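The four phases can be sketched on a toy site. This is a generic UCT-style illustration under heavy simplifications, not Agent Q’s implementation: the `SITE` graph is hypothetical, a state is just a page name (instead of an interaction summary plus DOM), `propose_actions` is a hypothetical stand-in for sampling k actions from the base LLM, selection uses plain UCB1, and rollouts use a sparse success reward with no self-critique.

```python
import math
import random

# Hypothetical toy "website" (assumption): each page maps to the links the agent could follow.
# A real Agent Q state would be a history summary plus the page's DOM, not just a page name.
SITE = {
    "home": ["search", "about", "deals"],
    "search": ["results"],
    "results": ["product", "search"],
    "product": ["checkout", "results"],
    "deals": ["about"],
    "about": [],
    "checkout": [],
}
GOAL, MAX_DEPTH = "checkout", 6


def propose_actions(page, k, rng):
    """Hypothetical stand-in for sampling k candidate actions from the base LLM."""
    links = SITE[page]
    return rng.sample(links, min(k, len(links)))


class Node:
    def __init__(self, page, depth, parent=None):
        self.page, self.depth, self.parent = page, depth, parent
        self.children = {}  # action (link) -> child Node
        self.visits, self.value = 0, 0.0
        self.expanded = False


def is_terminal(node):
    return node.page == GOAL or node.depth >= MAX_DEPTH or not SITE[node.page]


def ucb1(child, parent_visits, c=1.4):
    if child.visits == 0:
        return float("inf")  # try every sampled action at least once
    exploit = child.value / child.visits
    explore = c * math.sqrt(math.log(parent_visits) / child.visits)
    return exploit + explore


def rollout(page, depth, rng):
    """Simulation: follow random links; reward 1 only if the goal page is reached."""
    while page != GOAL and depth < MAX_DEPTH and SITE[page]:
        page, depth = rng.choice(SITE[page]), depth + 1
    return 1.0 if page == GOAL else 0.0


def mcts(root_page, iterations=200, k=2, seed=0):
    rng = random.Random(seed)
    root = Node(root_page, 0)
    for _ in range(iterations):
        node = root
        # 1. Selection: descend through expanded nodes by UCB1.
        while node.expanded and not is_terminal(node):
            parent = node
            node = max(parent.children.values(), key=lambda ch: ucb1(ch, parent.visits))
        # 2. Expansion: sample k candidate actions and add them as children.
        if not is_terminal(node):
            for link in propose_actions(node.page, k, rng):
                node.children[link] = Node(link, node.depth + 1, node)
            node.expanded = True
            node = rng.choice(list(node.children.values()))
        # 3. Simulation from the newly reached node.
        reward = rollout(node.page, node.depth, rng)
        # 4. Backpropagation: update visit counts and values up to the root.
        while node is not None:
            node.visits += 1
            node.value += reward
            node = node.parent
    # Recommend the root action that was visited most often.
    best = max(root.children, key=lambda a: root.children[a].visits)
    return best, root
```

Calling `mcts("home", iterations=300, k=3)` concentrates visits on the `search` branch, the only one that can reach checkout. With `k=2`, only two of the three links on a page are sampled, mirroring how a fixed-size action proposal can miss good actions entirely.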

🌸 Enhancing Decision-Making for LLM Agents via Step-Level Q-Value Models

Researchers from the National University of Defense Technology (Changsha, China) and the State Key Laboratory of Complex & Critical Software Environment propose an approach that uses a task-relevant Q-value model to guide action selection throughout the decision-making process.

The methodology begins by collecting decision-making trajectories annotated with step-level Q-values, using Monte Carlo Tree Search (MCTS) to construct detailed preference data. They then train another LLM on these preferences with Direct Preference Optimization (DPO), and this model serves as the Q-value model.
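The data-to-training step can be sketched in two parts: turning MCTS-annotated candidate actions into preference pairs, and the standard per-pair DPO loss. This sketch assumes MCTS has already produced a Q estimate for several candidate actions at each step; pairing the highest-Q action against the lowest-Q action is one plausible construction and an assumption here. The log-probabilities passed to the loss would come from the model being trained and a frozen reference model.

```python
import math


def step_preference_pairs(step_candidates):
    """Build (state, chosen, rejected) triples from MCTS-annotated candidate actions.

    step_candidates: list of (state, [(action, q_value), ...]).
    Assumption: the highest-Q action is "chosen" and the lowest-Q action is "rejected".
    """
    pairs = []
    for state, scored in step_candidates:
        if len(scored) < 2:
            continue  # need at least two actions to form a preference
        chosen = max(scored, key=lambda aq: aq[1])
        rejected = min(scored, key=lambda aq: aq[1])
        if chosen[1] > rejected[1]:
            pairs.append((state, chosen[0], rejected[0]))
    return pairs


def dpo_loss(logp_chosen, logp_rejected, ref_logp_chosen, ref_logp_rejected, beta=0.1):
    """Standard DPO loss for one pair: -log sigmoid(beta * margin)."""
    margin = beta * ((logp_chosen - ref_logp_chosen) - (logp_rejected - ref_logp_rejected))
    # Numerically stable form of -log(sigmoid(margin)) = log(1 + exp(-margin)).
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))
```

The loss equals log 2 when the trained model and the reference agree, and falls below log 2 as the trained model shifts probability toward the chosen (higher-Q) action relative to the reference.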

During inference, the LLM agent scores its candidate actions with the Q-value model and selects the one with the highest Q-value before interacting with the environment, so each step favors the action expected to yield the highest reward based on what the model has learned.
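At inference time, the selection step reduces to an argmax over sampled candidates. A sketch, assuming the agent has already sampled a few candidate actions and that the Q-value is read off the DPO-trained model as its implicit reward, beta * (log pi_theta - log pi_ref). Whether the paper scores actions exactly this way is an assumption, and the action strings and log-probabilities below are made-up illustrative values.

```python
from typing import Callable


def implicit_q(logp_policy: float, logp_ref: float, beta: float = 0.1) -> float:
    """DPO implicit reward, used here as a Q-value estimate (assumption)."""
    return beta * (logp_policy - logp_ref)


def select_action(state: str, candidates: list[str], q_fn: Callable[[str, str], float]) -> str:
    """Score every sampled candidate with the Q-value model and return the argmax."""
    return max(candidates, key=lambda a: q_fn(state, a))


# Hypothetical (policy, reference) log-probs for three candidate actions.
LOGP = {
    "click[Buy Now]": (-1.0, -2.5),
    "click[Back]": (-2.0, -1.5),
    "search[red shoes]": (-1.2, -1.4),
}


def toy_q(state: str, action: str) -> float:
    # The state is ignored in this toy table; a real Q-value model conditions on it.
    logp_policy, logp_ref = LOGP[action]
    return implicit_q(logp_policy, logp_ref)
```

Here `select_action("product page", list(LOGP), toy_q)` picks `click[Buy Now]`, the candidate whose probability rose most relative to the reference model. Because the Q-value model only reranks candidates, the underlying agent that proposes them can be swapped out.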

The Q-value models not only improve performance but also offer other advantages: they generalize across different LLM agents and integrate with existing prompting strategies.

The results suggest that strategic enhancements to the decision-making process can substantially improve LLM agents’ effectiveness in complex, multi-step tasks.