Last Friday marked a pivotal moment in the beautiful city of Brussels: the European Union's groundbreaking AI regulatory proposals advanced into their crucial final stage. This landmark legislation, the first of its kind globally, seeks to rigorously regulate artificial intelligence based on its potential for harm.

The process now moves into the decisive 'trilogue' phase, where the European Parliament, Commission, and Council collaboratively finalize the bill's contents, paving the way for its integration into EU law. This is a significant, high-stakes step in the legislative journey.

The intention of the bill is excellent, but is its execution good or bad for the community? Let's look into it in depth.

Macron attacked the new Artificial Intelligence Act, agreed last Friday, saying: “We can decide to regulate much faster and much stronger than our major competitors. But we will regulate things that we will no longer produce or invent. This is never a good idea.”


🦚 Snapshot

The European Union's Artificial Intelligence Act of 2023 is a pioneering law aimed at regulating AI technologies. Key points include:

The Basics:

Purpose: To ensure AI safety, transparency, and respect for fundamental rights.

Risk Classification (from highest to lowest risk): Prohibited AI » High-Risk AI » Limited-Risk AI » Minimal-Risk AI

Governance: Establishment of national and EU-level bodies for oversight and enforcement. Compliance grace periods range from 6 to 24 months.

Prohibitions: Bans on AI practices that pose an unacceptable risk, such as manipulative behavior and mass surveillance.

Transparency: Obligation for certain AI systems to disclose their AI-generated nature.

Global Impact: Expected to influence worldwide AI regulation standards.

Legislative Process: Involves trilogue negotiations among key EU institutions.

Implementation: Likely adoption in early 2024, with full effect expected between late 2025 and early 2026.

Exemptions: National security, military and defence, R&D, open source (partial)

Prohibited AI:

Social credit scoring systems.

Emotion recognition systems at work and in education.

AI used to exploit people's vulnerabilities (e.g., age, disability).

Behavioral manipulation and circumvention of free will.

Untargeted scraping of facial images for facial recognition.

Biometric categorization systems using sensitive characteristics.

Specific predictive policing applications.

Law enforcement use of real-time biometric identification in public (apart from in limited, pre-authorized situations).

High-Risk AI:

Medical devices and vehicles.

Recruitment, HR, and worker management.

Education and vocational training.

Influencing elections and voters.

Access to services (e.g., insurance, banking, credit, benefits, etc.).

Critical infrastructure management (e.g., water, gas, electricity, etc.).

Emotion recognition systems.

Biometric identification.

Law enforcement, border control, migration, and asylum.

Administration of justice.

Specific products and/or safety components of specific products.
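To make the tier ordering concrete, here is a minimal Python sketch that encodes the four tiers as an ordered enum and maps a handful of example use cases to them. The use-case keys, the `classify` and `obligations` helpers, and the chatbot and spam-filter examples are hypothetical illustrations, not terms from the Act; real classification depends on the legal text and a case-by-case assessment.

```python
from enum import IntEnum
from typing import Optional


class RiskTier(IntEnum):
    """The Act's four tiers; a higher value means stricter treatment."""
    MINIMAL = 0
    LIMITED = 1
    HIGH = 2
    PROHIBITED = 3


# Hypothetical keyword lookup, loosely based on the lists above.
# The Act defines these categories in legal text, not as string keys.
EXAMPLE_USE_CASES = {
    "social credit scoring": RiskTier.PROHIBITED,
    "emotion recognition at work": RiskTier.PROHIBITED,
    "untargeted facial image scraping": RiskTier.PROHIBITED,
    "recruitment screening": RiskTier.HIGH,
    "credit scoring for loans": RiskTier.HIGH,
    "critical infrastructure management": RiskTier.HIGH,
    "customer service chatbot": RiskTier.LIMITED,  # assumption: transparency duty only
    "spam filter": RiskTier.MINIMAL,  # assumption: illustrative minimal-risk case
}


def classify(use_case: str) -> Optional[RiskTier]:
    """Return the tier for a known example, or None if it needs a real assessment."""
    return EXAMPLE_USE_CASES.get(use_case)


def obligations(tier: RiskTier) -> str:
    """One-line paraphrase of what each tier implies, per the snapshot above."""
    return {
        RiskTier.PROHIBITED: "banned in the EU",
        RiskTier.HIGH: "allowed, subject to strict compliance requirements",
        RiskTier.LIMITED: "allowed, must disclose its AI nature",
        RiskTier.MINIMAL: "allowed, no specific obligations",
    }[tier]


if __name__ == "__main__":
    for case in ("recruitment screening", "social credit scoring", "video game AI"):
        tier = classify(case)
        if tier is None:
            print(f"{case}: not in the example table, needs a proper assessment")
        else:
            print(f"{case}: {tier.name} -> {obligations(tier)}")
```

Using `IntEnum` keeps the tiers comparable, so `RiskTier.HIGH > RiskTier.LIMITED` holds, mirroring the » ordering in the snapshot. Returning `None` for unknown cases is deliberate: silently defaulting to minimal risk would be the wrong failure mode for a compliance check.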

This Act marks a significant step in establishing legal frameworks for AI, balancing innovation with ethical and public welfare concerns.

Source:

🐣 LLMs (read: GPAI) and FLOP Games

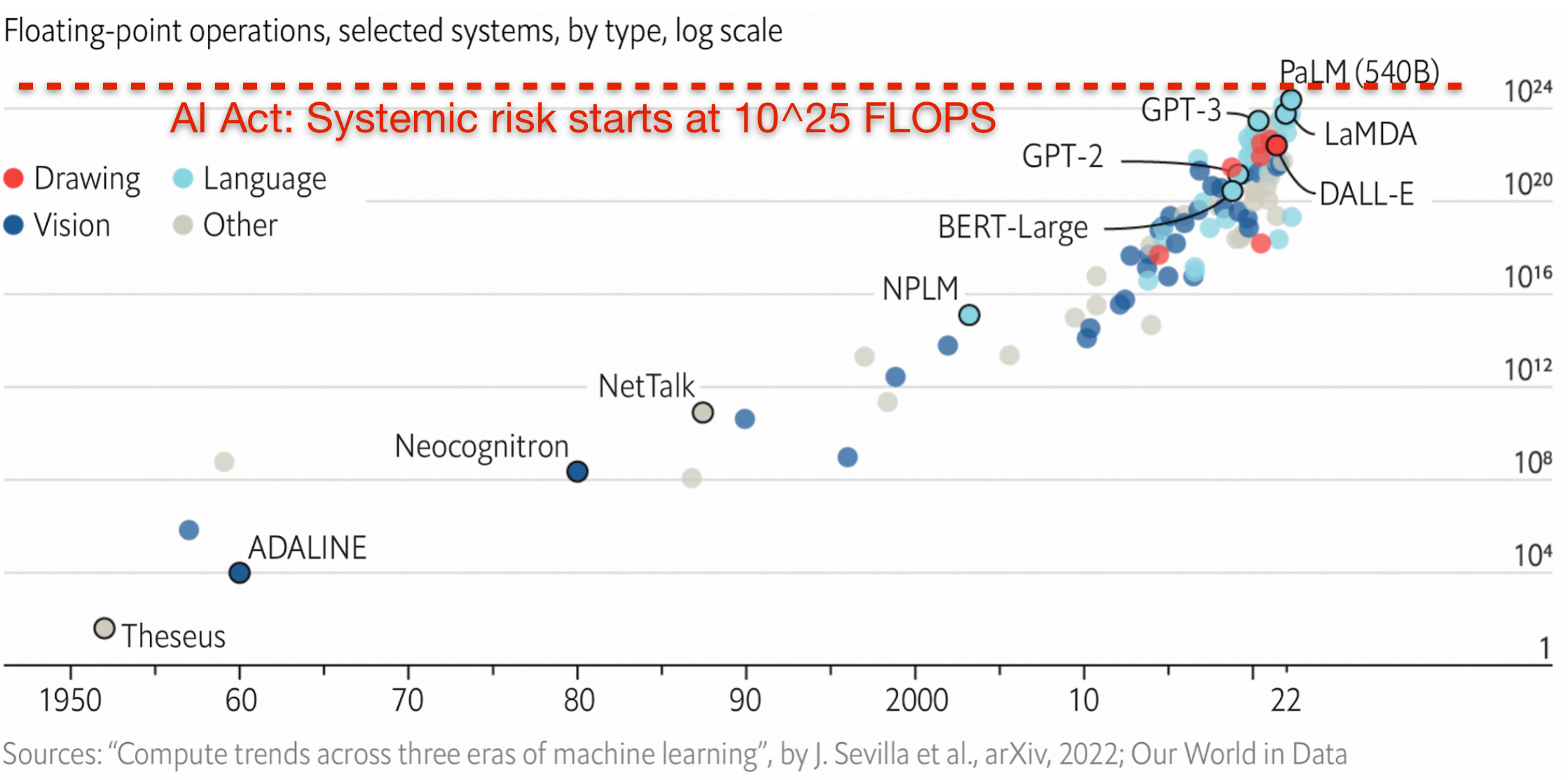

The Act introduces an automatic classification for general-purpose AI (GPAI) models deemed to pose 'systemic risk': any model whose cumulative training compute exceeds 10^25 floating-point operations (FLOPs). Note that this is a total count of operations used for training, not a rate per second.

Presently, this classification applies mainly to frontier models such as GPT-4 and Gemini. GPT-4's parameter count and training compute have not been disclosed; one common assumption is that it combines multiple smaller models.

However, within the next two years, a diverse array of models, both proprietary and open-source, is expected to fall into this category.
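Because the threshold is a total count of operations rather than a rate, it can be estimated before training starts. A common rule of thumb from the scaling-law literature (not something the Act prescribes) is that training a dense transformer costs about 6 FLOPs per parameter per training token. The Python sketch below applies that heuristic; the model sizes are hypothetical, and for a model built from multiple smaller expert models, as assumed above for GPT-4, `active_params` would be the parameters actually used per token.

```python
# AI Act presumption threshold for "systemic risk": cumulative training compute.
SYSTEMIC_RISK_THRESHOLD_FLOPS = 1e25


def estimate_training_flops(active_params: float, training_tokens: float) -> float:
    """Heuristic: ~6 FLOPs per parameter per token
    (roughly 2 for the forward pass and 4 for the backward pass)."""
    return 6 * active_params * training_tokens


def presumed_systemic_risk(active_params: float, training_tokens: float) -> bool:
    """True if the estimated training compute exceeds the 10^25 FLOPs threshold."""
    return estimate_training_flops(active_params, training_tokens) > SYSTEMIC_RISK_THRESHOLD_FLOPS


# Hypothetical models, not real disclosures.
EXAMPLES = [
    ("hypothetical 70B model, 2T tokens", 70e9, 2e12),
    ("hypothetical 500B model, 10T tokens", 500e9, 10e12),
]

if __name__ == "__main__":
    for name, params, tokens in EXAMPLES:
        flops = estimate_training_flops(params, tokens)
        print(f"{name}: ~{flops:.1e} FLOPs, presumed systemic risk: "
              f"{presumed_systemic_risk(params, tokens)}")
```

With these numbers, the first model lands at roughly 8.4 × 10^23 FLOPs, more than ten times below the threshold, while the second lands at roughly 3 × 10^25 FLOPs, above it. The heuristic ignores details such as attention cost and repeated training runs, so treat it as an order-of-magnitude check only.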

The Commission can also designate other GPAI models as "systemic risk" based on other criteria, such as the number of business users, the number of end users, or the modalities supported, but this designation is not automatic. Providers of GPAI models with systemic risk must meet testing and evaluation requirements (self-assessment), and external red-teaming and cybersecurity measures are required.

These models must also comply with EU copyright directives and fulfil the obligations that apply to GPAI models without systemic risk. What exactly are those requirements? The draft is not yet clear or transparent about them.

Technical documentation (including model cards) must be made available if the AI Office or national supervisory authorities come knocking. This includes information on the training data set, infrastructure, computational resources used, training, testing, etc. For open-source models, the data and code details are already public. What the community is really looking for is access to GPU clusters to experiment with variations, and the draft does not mention this.

Providers also need to make a similar document available to those downstream in the supply chain. In addition, a detailed summary of the content used to train the GPAI model (as generative AI) is required. This summary should not be too technical and should be understandable to non-specialists.

The AI Office is expected to provide a template so that GPAI providers can present this summary in an understandable format. Copyright holders can then use this information to opt out, as the EU copyright directive allows.

The Commission also has to review the energy efficiency of GPAI regularly and propose measures or actions. There is an open-source exemption: open-source GPAI models (not systems) would only have to fulfil the copyright-related requirements, plus the full set of obligations if they are classified as "systemic risk".
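The tiered GPAI obligations described above are easier to follow as a small decision rule. The Python sketch below encodes this post's reading of the provisional text: every GPAI model gets the baseline duties, systemic-risk models add evaluation, red-teaming and cybersecurity, and open-source models without systemic risk keep only the copyright-related duties. Treating the training-content summary as one of those copyright-related duties is an assumption of this sketch, and the class, function, and model names are hypothetical; the final legal text may differ.

```python
from dataclasses import dataclass
from typing import List

# Obligation labels paraphrased from the paragraphs above (hypothetical wording).
COPYRIGHT_DUTIES = [
    "comply with EU copyright rules",
    "publish a plain-language summary of training content",
]
BASELINE_DUTIES = [
    "technical documentation for the AI Office / national authorities",
    "documentation for downstream providers",
] + COPYRIGHT_DUTIES
SYSTEMIC_RISK_DUTIES = [
    "model evaluation and testing (self-assessment)",
    "external red-teaming",
    "cybersecurity protections",
]


@dataclass(frozen=True)
class GPAIModel:
    name: str
    open_source: bool
    systemic_risk: bool  # e.g. training compute > 1e25 FLOPs, or designated by the Commission


def obligations_for(model: GPAIModel) -> List[str]:
    """Return the duties that apply to a GPAI model under this simplified reading."""
    if model.systemic_risk:
        # The open-source exemption does not cover systemic-risk models.
        return BASELINE_DUTIES + SYSTEMIC_RISK_DUTIES
    if model.open_source:
        # Open-source exemption: only the copyright-related duties remain.
        return list(COPYRIGHT_DUTIES)
    return list(BASELINE_DUTIES)


if __name__ == "__main__":
    models = [
        GPAIModel("hypothetical-open-7b", open_source=True, systemic_risk=False),
        GPAIModel("hypothetical-closed-api", open_source=False, systemic_risk=False),
        GPAIModel("hypothetical-frontier", open_source=True, systemic_risk=True),
    ]
    for m in models:
        print(m.name, "->", obligations_for(m))
```

The systemic-risk check comes first on purpose: as described above, the open-source exemption falls away once a model is classified as systemic risk.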

"The legislation ultimately included restrictions for foundation models but gave broad exemptions to “open-source models,” which are developed using code that’s freely available for developers to alter for their own products and tools. The move could benefit open-source AI companies in Europe that lobbied against the law, including France’s Mistral and Germany’s Aleph Alpha, as well as Meta, which released the open-source model LLaMA."


✨ Observations

The EU needs to understand that people want an industry that works, and regulation can be required to make sure this exists. But nobody is clamoring for regulation in the absence of industry. ChatGPT is only a year old, and the industry is still working out the best architectures. The community does not want red tape this early.

I live in the EU and have seen how much paperwork and extra infrastructure it takes to comply with GDPR. And that process is still not mature.

The Act will add another layer of responsibility for integrating generative AI into the fintech industry, which already has to juggle several complicated regulations. It will create extra complications for fintech firms.

Other countries, such as the UAE and Japan, have corporate and data-transparency laws that are more favorable to the LLM industry. Europe will soon be using non-European LLMs trained on European data.

An AI regulation office in Europe? What is that?

I'll end this blog with an excerpt from the Guardian.

Sadly, the Franco-German-Italian volte face has a simpler, more sordid, explanation: the power of the corporate lobbying that has been brought to bear on everyone in Brussels and European capitals generally. And in that context, isn’t it interesting to discover (courtesy of an investigation by Time) that while Sam Altman (then and now again chief executive of OpenAI after being fired and rehired) had spent weeks touring the world burbling on about the need for global AI regulation, behind the scenes his company had lobbied for “significant elements of the EU’s AI act to be watered down in ways that would reduce the regulatory burden on the company”, and had even authored some text that found its way into a recent draft of the bill.

So, will the EU stand firm on preventing AI companies from marking their own homework? I fervently hope that it does. But only an incurable optimist would bet on it.

**

I will publish the next Edition on Thursday.

This is the 24th edition. If you have any feedback, please don’t hesitate to share it with me, and if you love my work, do share it with your colleagues.

It takes time to research and document each edition. Please become a paid subscriber and support my work.

Cheers!!

Raahul

**