🌻 Edition 25: Can we use LLMs to improve AppSec? 🔒🎄

Merry Christmas 🎄

Over the last 12 months, most teams have asked themselves, “How can we leverage Gen AI to improve what we are doing?” For AppSec leaders, though, the immediate problem was the secure usage of Gen AI. As the dust settles on that (we at least understand the risks, even if we have not entirely figured out how to manage them), the focus needs to shift to how to leverage Gen AI to solve existing Security challenges. This post focuses specifically on AppSec, but there are plenty of use cases in other areas of Security too.

There are already some excellent resources on which Security problems can be solved using Gen AI, and companies are building code-scanning and pen-testing tools that leverage it. The question worth asking is this: in which areas can Gen AI make a 10x difference over existing solutions?

The focus of this post is to define a framework that Security leaders can use to make significant improvements to their AppSec programs.

🎄 Hypothesis

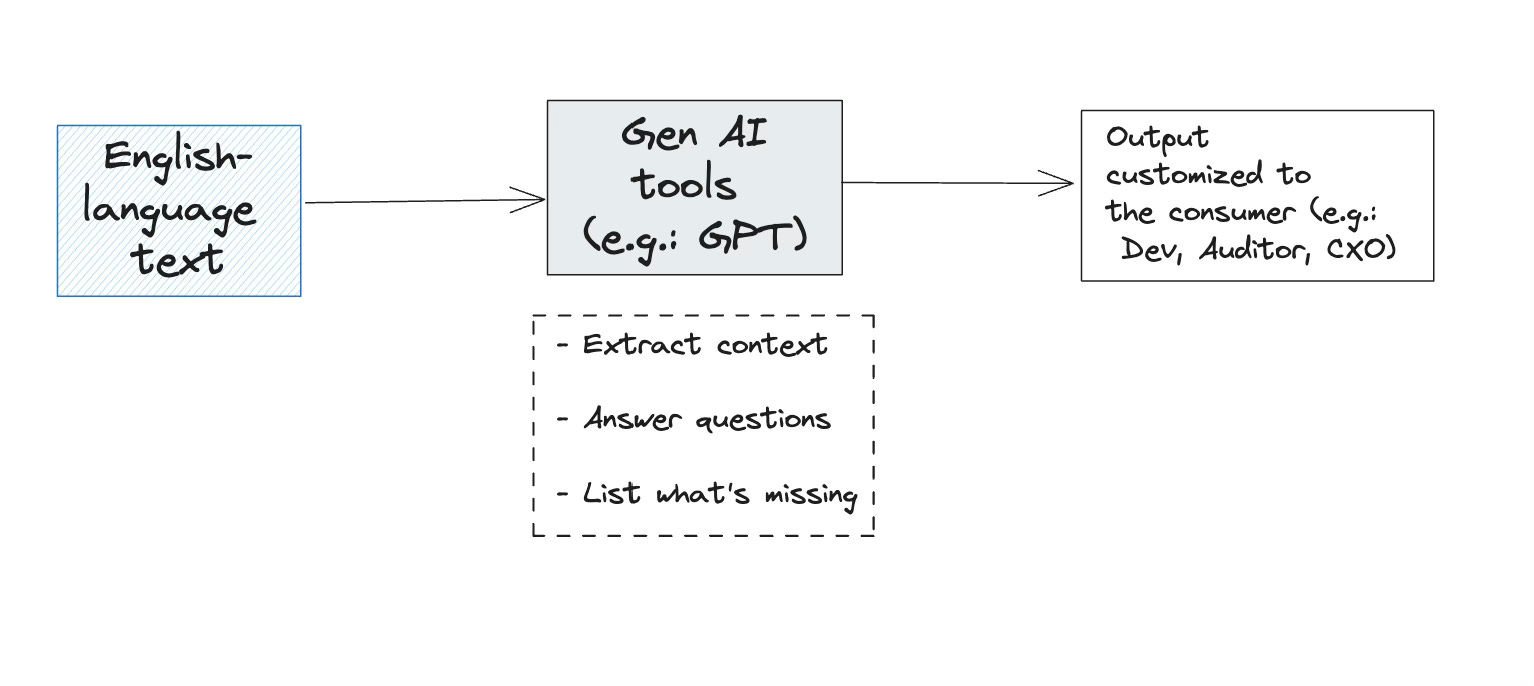

Many AppSec tasks require us to consume content written in English (or other natural languages), analyze it, and respond in English (think design reviews, risk assessments, process exception approvals, etc.). These tasks are done manually because current tooling only processes machine-readable languages (Python code, HTTP traffic, etc.). With Gen AI, we have an opportunity to automate these tasks and make a 10x improvement over current alternatives.

As the co-founder of Seezo, Sandesh is trying to solve cybersecurity challenges using Gen AI. Before this, Sandesh spent a decade in various cybersecurity roles, including as the head of Security at Razorpay, India's largest payment gateway company. In his spare time, Sandesh enjoys running, birding, and trying street food in every city he visits :)

🎄 The framework

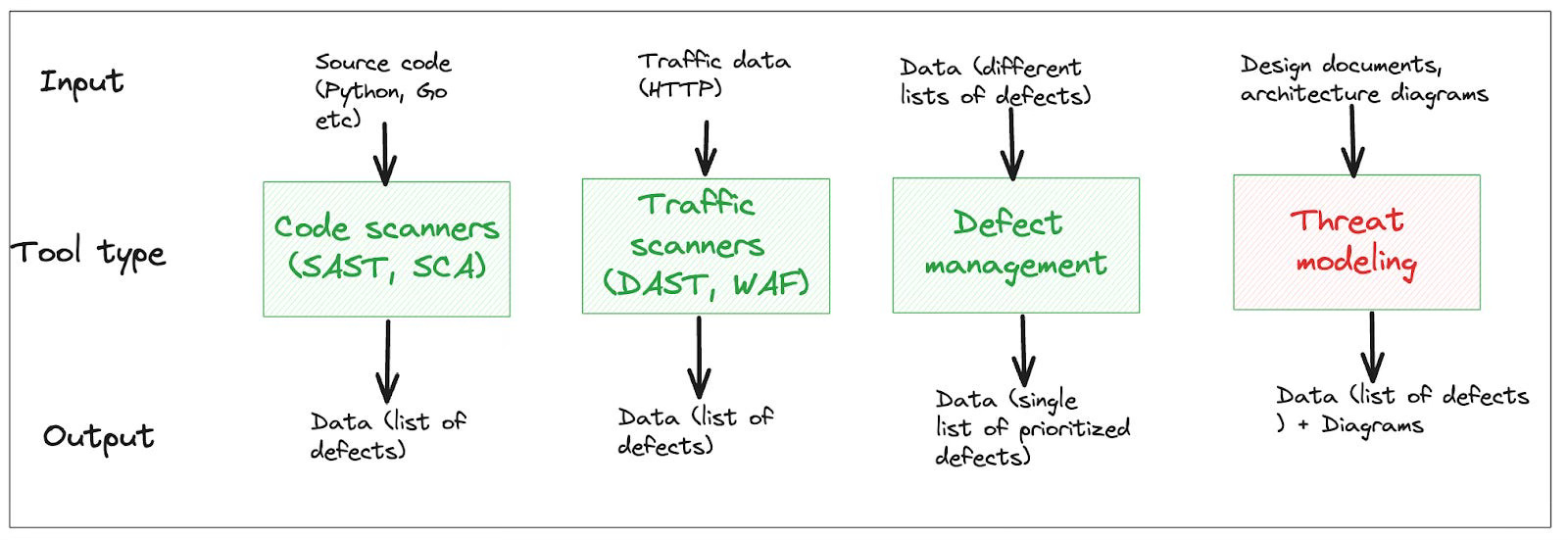

One way to categorize existing tools is by the type of input they process and the type of output they generate.

In the first three examples, the input is structured data, which can be contextualized easily. For instance, SAST tools deal with a finite set of programming languages that can be parsed and analyzed to detect insecure patterns. A DAST tool needs to understand the structure of HTTP traffic to detect defects. Defect management tools receive multiple lists of defects in a structured format (CSV, XML, etc.) as input and spit out a single, prioritized list (also structured) as output.

This breaks down when the input is written in a natural language (e.g., English). For instance, the input to a threat modeling tool is typically a design document or an architecture diagram. These documents are written in natural language and don’t follow a structure that can be “scanned” easily. In other words, while SAST tools can use abstract syntax trees (ASTs) to extract structure and context from code, threat modeling tools cannot simply extract context from a design document. To make up for this, threat modeling tools rely on assessors providing context (filling in forms, drawing diagrams, and so on).
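To make the contrast concrete, here is a minimal sketch of why code is “scannable”: Python’s standard `ast` module parses source code into a tree, and a rule can walk that tree looking for an insecure pattern. The rule itself (flagging direct `eval`/`exec` calls) and the function name are illustrative assumptions, not how any particular SAST product works. There is no equivalent parser that turns a free-form design document into a tree like this.

```python
import ast

# Illustrative rule (an assumption, not any product's rule set):
# flag direct calls to eval() or exec() by bare name.
DANGEROUS_CALLS = {"eval", "exec"}


def find_dangerous_calls(source: str) -> list[tuple[str, int]]:
    """Parse Python source into an AST and return (name, line) for each flagged call."""
    tree = ast.parse(source)
    findings = []
    for node in ast.walk(tree):
        # Matches eval(x) but not obj.eval(x): node.func must be a plain Name.
        if isinstance(node, ast.Call) and isinstance(node.func, ast.Name):
            if node.func.id in DANGEROUS_CALLS:
                findings.append((node.func.id, node.lineno))
    # ast.walk is breadth-first, so sort to report findings in source order.
    return sorted(findings, key=lambda f: f[1])


sample = """
user_input = input()
result = eval(user_input)
print(len(user_input))
"""
print(find_dangerous_calls(sample))  # [('eval', 3)]
```

The structure (a call node, its function name, its line number) comes for free from the parser; that is the context a design document does not offer.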

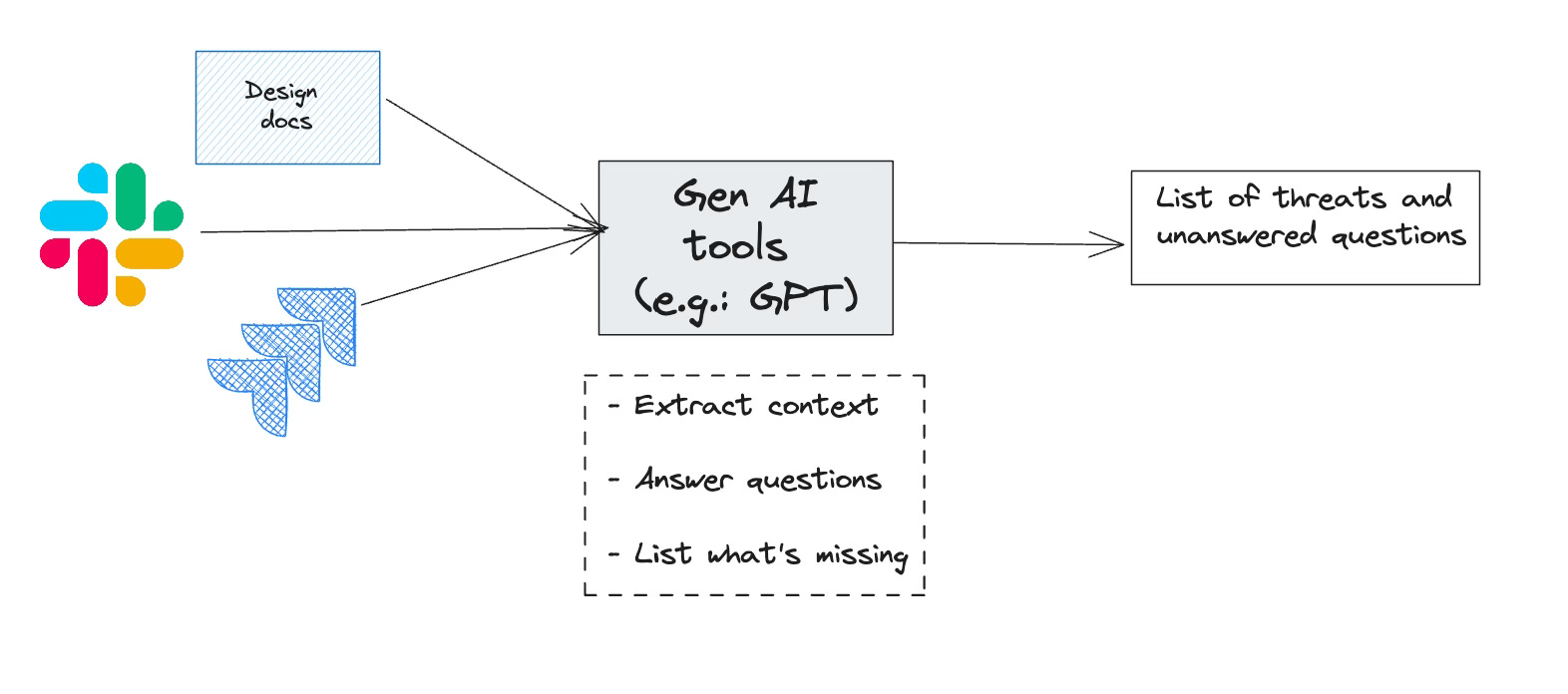

Gen AI tools are capable of reading input in English, understanding context, and responding to the audience in a format they prefer. AppSec teams can leverage this to automate tasks where we need to extract context from content written in English (design docs, Jira tickets, Slack conversations) to produce meaningful outputs.

🎄 Use cases

Using the above framework, here are a few use cases to think about:

✨ Threat Modeling

For decades now, Security experts have believed in the power of threat modeling: the process of reviewing artifacts such as design documents and architecture diagrams to produce a list of possible, context-specific threats. Typically, threat modeling involves reading documents, interviewing stakeholders (engineering, product, etc.), interpreting architecture diagrams, and so on. While experts largely agree on its usefulness, most of the work is performed manually by highly skilled (and expensive) security engineers. This does not scale well. As a result, companies only perform threat modeling on critical applications, ignore it altogether, or build a large team of engineers/consultants to do the job (also called “throwing people at the problem”).

With Gen AI, we have an opportunity to fix that. We can use LLM APIs (e.g., GPT-4 Turbo) to read documents, interpret architecture diagrams, and come up with a list of recommendations in line with a company’s security standards.
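A minimal sketch of the document-reading half of this idea, assuming the model is reachable through any function that takes a prompt string and returns text. The prompt wording, the JSON shape, and the names `threat_model`, `Threat`, and `fake_llm` are hypothetical; `fake_llm` stands in for a real API call so the example runs offline. Interpreting architecture diagrams would additionally require a model that accepts images, which this sketch does not cover.

```python
import json
from dataclasses import dataclass
from typing import Callable


@dataclass
class Threat:
    title: str
    component: str
    recommendation: str


# Hypothetical prompt; the wording and JSON shape are assumptions for illustration.
PROMPT_TEMPLATE = """You are an application security reviewer.

Company security standards:
{standards}

Design document:
{design_doc}

List context-specific threats as a JSON array of objects with the keys
"title", "component" and "recommendation". Return only JSON."""


def threat_model(design_doc: str, standards: str,
                 complete: Callable[[str], str]) -> list[Threat]:
    """Send the design doc and standards to a text-in/text-out LLM call and parse the threats."""
    prompt = PROMPT_TEMPLATE.format(standards=standards, design_doc=design_doc)
    items = json.loads(complete(prompt))  # raises ValueError if the reply is not valid JSON
    return [Threat(i["title"], i["component"], i["recommendation"]) for i in items]


# Stand-in for a real model call, so the sketch runs offline.
def fake_llm(prompt: str) -> str:
    return ('[{"title": "IDOR on GET /invoices/{id}", "component": "Billing API", '
            '"recommendation": "Enforce per-tenant authorization on every lookup"}]')


threats = threat_model(
    design_doc="The Billing API exposes GET /invoices/{id} to logged-in customers.",
    standards="All endpoints must enforce server-side authorization.",
    complete=fake_llm,
)
print(threats[0].title)  # IDOR on GET /invoices/{id}
```

Asking for structured JSON rather than free text makes the output easy to validate, track in a ticketing system, and hand to a human reviewer.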

✨ Delivering security standards better

A good way to avoid whole classes of defects is to implement secure coding standards across your org. There are three parts to this: writing the standards, communicating them to developers, and enforcing them. While we have made excellent progress on the first part (writing the standards), we have failed on the other two as an industry. Developers have no interest in consulting a 40-page document on how to encrypt sensitive data when they write code (most developers in large companies may not even know that such a guide exists).

With Gen AI, this can change. Imagine if we could deliver specific secure coding guidelines to developers as security requirements: not the entire text, but only the parts relevant to the feature they are building. We can achieve this by having Gen AI tools map the feature (reading its details in Jira, the design doc, etc.) to the relevant sections of the organization’s security standards.
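One way this mapping could be sketched, assuming the standard has already been split into sections with stable IDs: list the sections in the prompt, ask the model which IDs apply to the feature, and discard any ID that does not exist in the real standard (a cheap guard against hallucination). The section IDs and text, `relevant_requirements`, and `fake_llm` are all hypothetical.

```python
import json
from typing import Callable

# Hypothetical excerpt of an org's secure coding standard, keyed by section ID.
STANDARDS = {
    "CRYPTO-1": "Encrypt sensitive data at rest with the approved KMS-managed keys.",
    "AUTH-2": "Every API endpoint must enforce authorization on the server side.",
    "LOG-3": "Never write secrets or personal data to application logs.",
}


def relevant_requirements(feature: str, complete: Callable[[str], str]) -> dict[str, str]:
    """Ask an LLM which standard sections apply to a feature; return only those sections."""
    catalog = "\n".join(f"{sid}: {text}" for sid, text in STANDARDS.items())
    prompt = (
        "Given these secure coding standards:\n" + catalog
        + "\n\nand this feature description:\n" + feature
        + "\n\nReturn a JSON array of the section IDs that apply. Return only JSON."
    )
    ids = json.loads(complete(prompt))
    # Drop any ID the model invented that is not in the real standard.
    return {sid: STANDARDS[sid] for sid in ids if sid in STANDARDS}


# Stand-in for a real model call; "SEC-99" simulates a hallucinated section ID.
def fake_llm(prompt: str) -> str:
    return '["CRYPTO-1", "AUTH-2", "SEC-99"]'


reqs = relevant_requirements(
    "Store customer card details in a new payments table exposed via a /cards API",
    fake_llm,
)
for sid, text in reqs.items():
    print(f"{sid}: {text}")
```

The developer then sees two short requirements attached to their ticket instead of a link to the full document.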

✨ Vendor risk management

If I had to bet all the money in my wallet against all the money in your wallet, I would bet that you will never find a security executive or a software business owner who is happy with the way we do vendor risk management. Those vendor risk questionnaires with hundreds of questions are supposed to help you decide whether a vendor should be onboarded. In most cases, this is just a formality that delays the process for buyers and does not provide meaningful context to the security team.

How about this: ask the vendor for all the documents they can provide about their company. Have an LLM consume them and automatically answer your questionnaire. Once completed, send it to the vendor for attestation. If some of the answers are unclear or missing, ask the vendor to fill those in.

I am not entirely confident that this will improve our security posture, but it will save thousands of valuable hours that can be spent on other meaningful tasks.
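The questionnaire workflow described above could be sketched like this, assuming each question is answered independently from the vendor’s documents and the model is instructed to reply `UNKNOWN` when the documents are silent. Answered questions go to the vendor for attestation; the rest go back as follow-ups. All names here (`draft_questionnaire`, `QuestionnaireDraft`, `fake_llm`, “Acme Corp”) are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class QuestionnaireDraft:
    answered: dict[str, str] = field(default_factory=dict)  # goes to the vendor for attestation
    follow_ups: list[str] = field(default_factory=list)     # goes back to the vendor to fill in


def draft_questionnaire(questions: list[str], vendor_docs: str,
                        complete: Callable[[str], str]) -> QuestionnaireDraft:
    """Pre-fill a questionnaire from vendor documents; route unanswerable questions back."""
    draft = QuestionnaireDraft()
    for question in questions:
        prompt = (
            "Answer the question using ONLY the vendor documents below. "
            "If they do not clearly answer it, reply exactly UNKNOWN.\n\n"
            f"Documents:\n{vendor_docs}\n\nQuestion: {question}"
        )
        answer = complete(prompt).strip()
        if not answer or answer == "UNKNOWN":
            draft.follow_ups.append(question)
        else:
            draft.answered[question] = answer
    return draft


# Stand-in model that only "knows" about SOC 2, so the sketch runs offline.
def fake_llm(prompt: str) -> str:
    question = prompt.rsplit("Question:", 1)[-1]
    return "Yes, per the SOC 2 Type II report provided." if "SOC 2" in question else "UNKNOWN"


draft = draft_questionnaire(
    ["Do you hold a SOC 2 report?", "Do you encrypt backups?"],
    vendor_docs="Acme Corp security overview: we hold a SOC 2 Type II report.",
    complete=fake_llm,
)
print(draft.answered)
print(draft.follow_ups)  # ['Do you encrypt backups?']
```

Keeping an explicit “I don’t know” path matters more than the happy path here: it is what turns a guessed answer into a question the vendor has to answer and attest to.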

✨ [Not AppSec] Cyber risk assessments

Large organizations routinely perform risk assessments of their initiatives (e.g., a risk assessment of a plan to move from AWS to a multi-cloud setup). Can we replace expensive consultants with an API that takes a first pass? Most of the work involves a security expert reading documents, asking relevant questions, and publishing their findings. It is worth exploring whether Gen AI tools can do most of this.

I recently quit my job and started Seezo with a friend to solve Product Security problems using Gen AI. Some of the ideas outlined in this post form the basis of the product we are building. If you want to be the first to hear about what we build, please sign up at https://seezo.io

🎄 A few caveats

✨ Accuracy

We should not expect 100% accuracy on day one. Hallucinations and incomplete information can make the tooling’s output suboptimal, so we must rely on manual triage to spot-check results (at least in the short term).

✨ Replacing humans

Don’t expect Gen AI to replace your seasoned AppSec expert (and no, I am not just saying this to preserve my tribe :) ). What it can do is give your AppSec team superpowers: work that took days can now get done in hours. For this alone, it’s worth making sure your team is well-versed in the latest in Gen AI. This does raise larger questions about what entry-level AppSec engineers should focus on (given that Gen AI can take over much of the work they do). But that’s a question for a different day (and possibly a different author :)).

✨ Dependence on 3rd party LLMs

Depending on how the LLM ecosystem shapes up, we may have to rely on third-party, closed-source models such as OpenAI’s to build these solutions. This may lead to a situation where your entire solution breaks down if one company makes breaking changes (or encounters boardroom drama). This leads to a classic build vs. buy dilemma. For now, given how expensive (in time, skill, and money) it is to build, train, or fine-tune open-source models, “buy” may be the winning strategy for your company. If your organization has the resources to leverage open-source models, that may be worth considering.
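One common way to soften this dependency (an architectural suggestion, not something this post prescribes) is to make your tooling depend on a thin interface rather than a specific vendor SDK, so that a provider can be swapped out or used as a fallback. The sketch below assumes every model can be wrapped behind a `complete(prompt) -> str` method; `LLMClient`, `FallbackClient`, `HostedModel`, and `SelfHostedModel` are hypothetical names, and the two adapters are stubs.

```python
from typing import Protocol


class LLMClient(Protocol):
    """The only LLM interface the rest of the AppSec tooling depends on."""

    def complete(self, prompt: str) -> str: ...


class FallbackClient:
    """Try providers in order and fall through to the next one when a call fails."""

    def __init__(self, *providers: LLMClient) -> None:
        self.providers = providers

    def complete(self, prompt: str) -> str:
        errors = []
        for provider in self.providers:
            try:
                return provider.complete(prompt)
            except Exception as exc:  # e.g. an outage, a breaking API change, revoked access
                errors.append(f"{type(provider).__name__}: {exc}")
        raise RuntimeError("all LLM providers failed: " + "; ".join(errors))


# Hypothetical adapters; real ones would wrap a vendor SDK or a self-hosted open-source model.
class HostedModel:
    def complete(self, prompt: str) -> str:
        raise ConnectionError("API unavailable")


class SelfHostedModel:
    def complete(self, prompt: str) -> str:
        return f"[self-hosted] answer to: {prompt}"


client = FallbackClient(HostedModel(), SelfHostedModel())
print(client.complete("Summarize this design doc"))
# [self-hosted] answer to: Summarize this design doc
```

This does not make the build vs. buy question go away: prompts tuned for one model can behave differently on another, so any fallback still needs its own testing.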

✨ Skills needed

This post focuses on the “what”, not the “how”. To build these solutions, we need team members skilled at understanding and building with Gen AI. In the short term, this may require bolstering your team with ML engineers or leveraging internal ML teams (if your company has them). Again, this is easier said than done, given that the supply of such talent is far less than the demand.

🎄 Conclusion

That’s it for today! Are there other Gen AI use cases for AppSec that are missing from the post? Are there interesting initiatives you have seen in action? Tell me more! You can drop me a message on Twitter (or whatever it is called these days), LinkedIn, or email. You can also follow along with what our company is building at seezo.io. If you find this newsletter useful, share it with a friend or colleague, or on your social media feed.

I will publish the next Edition on Sunday.

This is the 25th Edition. If you have any feedback, please don’t hesitate to share it with me, and if you love my work, do share it with your colleagues.

It takes time to research and document all of this, so please become a paid subscriber and support my work.

Cheers!!

Raahul
